What is shopwithdice.com?

Since 2017, when I first started working with AI and what we used to call “chatbots” back then, I’ve had this vision of a world where fashion girlies can find the latest and greatest by simply explaining what they want in natural language. No keyword searches on Google, no going door-to-door, no spending hours on e-commerce sites, just explaining to an app what you want and, magically, the right thing is found.

Fast forward to 2025, and we’re still trying to solve this problem. In fact, I’m still trying to solve this problem, only now it’s at Google, and the problem feels much bigger. Inventory online and offline continues to grow, the barrier to entry for new brands and designers continues to shrink, and overall, the way we search for products has not really been reinvented, aside from the fact that influencers are now the best option for filtering through all that noise.

At the end of the day, we’re all just hoping LLMs and whatever subsequent technologies come along can solve this age-old problem of helping people find the right stuff.

Anyways, back to 2017 when I first had my idea for Dice. I look back on this now and wonder if I was just ahead of my time because it feels even more relevant today than it was back then.

So what was it?

Dice, named after the act of “rolling the dice”, was an app I built (“I” meaning with the help of some engineers) that let fashion girlies crowdsource product recommendations, in the form of video, directly from retailers by simply explaining what they want. It’s essentially a digital version of speaking to someone at the store. There was, of course, an AI angle to this: the request and recommendation pairs would ultimately become the golden dataset for training a model that could respond to users on behalf of the retailer.

So why didn’t Dice happen? Well, I began fundraising in February 2020 after successfully onboarding 3 boutiques and found myself in a bit of a challenging environment. With such uncertainty around the future of in-store shopping due to Covid, I took a pause and decided to launch a website dedicated to helping users discover all the retailers that I wanted to eventually have on Dice. A sort of “Infatuation for Retail” while I waited out the storm, and this is shopwithdice.com.

The storm continued for another few years as we know, and I eventually decided to grow up and get a real job.

I’ve been at that real job for a little over 4 years now and the storm has certainly passed. Maybe one day I’ll find the energy to give this another go :)

Product management for operational systems

In June of 2015, right after graduating from business school, I landed a product management job at arguably the “hottest” startup in New York, a company called Jet.com. As one of the early product leaders at the company, I got the chance to build lots of systems that did lots of different things from scratch. Our team reported to the co-founder and COO, Nate Faust, which meant my focus was on building systems that execute, as opposed to what I do today, which is building interfaces that show the right thing in the right way.

I was thinking about my time there the other day (in the fondest way possible, as this was hands-down the best job I’ve ever had), and realized how dramatically different product management can be depending on the focal area. And while there are aspects of both that are challenging, complex, and creative, working on operational systems is the clear winner in terms of pure left-brain difficulty. It’s a discipline that’s less about understanding the consumer, which requires intuition, empathy, and a general sense of what’s “cool” for users, and more about figuring out, like a puzzle, how to technically get from A to Z and how information moves and changes along the way. In a lot of ways, it’s similar to chaining chemical reactions and there’s usually a right answer.

Some of the systems I worked on include order management, order operations, customer service software including CRMs, phone, chat, and email routing systems, payment and fraud systems, and the most applicable work to what I do today - chatbots, natural language processing, scripting, and automated workflows.

What does it look like?

Building operational systems is all about building systems that deliver something somewhere. And for a PM, that means defining how that happens, which means it usually starts with a detailed map of the how.

For example, for a feature like partial refunds, there are 4 players in the system: the user, the CRM UI, the CRM backend, and the order management system. Each passes information back and forth across the system until the desired action is accomplished, which in this case is returning part of the purchase price to the user as a refund. See figure 1.
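To make the four-player flow a little more concrete, here is a minimal Python sketch. Every class, method, and field name in it (`OrderManagementSystem`, `CrmBackend`, `CrmUi`, `refund_percent`) is hypothetical and not Jet’s actual system. It only shows the CRM UI collecting the agent’s input, the CRM backend validating it, and the OMS recording the refund and reporting the remaining balance back up the chain.

```python
from dataclasses import dataclass


@dataclass
class OrderLine:
    """One line of an order (hypothetical schema)."""
    sku: str
    unit_price_cents: int
    quantity: int
    refunded_cents: int = 0

    def refundable_cents(self) -> int:
        return self.unit_price_cents * self.quantity - self.refunded_cents


class OrderManagementSystem:
    """Hypothetical OMS: owns order state and records refunds."""

    def __init__(self, orders: dict[str, dict[str, OrderLine]]):
        self.orders = orders

    def get_line(self, order_id: str, sku: str) -> OrderLine:
        return self.orders[order_id][sku]

    def record_refund(self, order_id: str, sku: str, amount_cents: int) -> int:
        line = self.get_line(order_id, sku)
        line.refunded_cents += amount_cents
        return line.refundable_cents()  # remaining balance reported back to the CRM


class CrmBackend:
    """Hypothetical CRM backend: validates the request before touching the OMS."""

    def __init__(self, oms: OrderManagementSystem):
        self.oms = oms

    def partial_refund(self, order_id: str, sku: str, amount_cents: int) -> dict:
        line = self.oms.get_line(order_id, sku)
        if not 0 < amount_cents <= line.refundable_cents():
            return {"ok": False, "reason": "amount exceeds refundable balance"}
        remaining = self.oms.record_refund(order_id, sku, amount_cents)
        return {"ok": True, "refunded_cents": amount_cents, "remaining_cents": remaining}


class CrmUi:
    """Hypothetical agent-facing UI: collects input and renders the result for the user."""

    def __init__(self, backend: CrmBackend):
        self.backend = backend

    def refund_percent(self, order_id: str, sku: str, percent: int) -> str:
        line = self.backend.oms.get_line(order_id, sku)
        amount = line.unit_price_cents * line.quantity * percent // 100
        result = self.backend.partial_refund(order_id, sku, amount)
        if not result["ok"]:
            return f"Refund declined: {result['reason']}"
        return f"Refunded ${result['refunded_cents'] / 100:.2f} to the customer"


oms = OrderManagementSystem({"A1": {"CHIPS": OrderLine("CHIPS", 399, 2)}})
ui = CrmUi(CrmBackend(oms))
print(ui.refund_percent("A1", "CHIPS", 50))  # Refunded $3.99 to the customer
```

Amounts are kept in integer cents to avoid floating-point rounding. The validation lives in the backend rather than the UI, an assumed design choice so that no client can over-refund.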

Alternatively, for a chat-based experience that lets users interact with an AI customer service agent in natural language, there are at least five players in the system: the chat interface (and user); the chat platform and bot orchestration layer (the quarterback); the NLP layer, where the user’s natural language is converted into “a topic” that the rest of the ecosystem can understand; the knowledgebase, where content and scripts are stored; and a suite of internal systems that offer APIs to execute actions on behalf of the user (like cancelling an order or requesting a replacement). See figures 2 & 3.
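Here is a toy sketch of the handoff between the NLP layer and the knowledgebase. It assumes a simple keyword-overlap classifier standing in for a real trained NLP model. The topics, example phrases, script names, and the `handoff_to_human` fallback are all hypothetical.

```python
import re

# Hypothetical example phrases per topic; a real NLP layer would use a trained model.
TOPIC_EXAMPLES = {
    "damaged_item": ["my item arrived broken", "it was smashed",
                     "the product is damaged", "arrived crushed in pieces"],
    "cancel_order": ["cancel my order", "i want to cancel"],
    "where_is_my_order": ["where is my package", "track my order", "has my order shipped"],
}

# Hypothetical knowledgebase: topic -> script the chat platform should execute.
KNOWLEDGEBASE = {
    "damaged_item": "damaged_item_script",
    "cancel_order": "cancel_order_script",
    "where_is_my_order": "order_status_script",
}

STOPWORDS = {"a", "an", "the", "i", "my", "it", "is", "was", "were", "to", "of", "in", "that", "just"}


def content_words(text):
    """Lowercase, keep alphabetic tokens, and drop stopwords."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def classify(utterance):
    """Return the topic whose best example shares the most words, or None if nothing overlaps."""
    words = content_words(utterance)
    best_topic, best_score = None, 0
    for topic, examples in TOPIC_EXAMPLES.items():
        score = max(len(words & content_words(e)) for e in examples)
        if score > best_score:
            best_topic, best_score = topic, score
    return best_topic


def route(utterance):
    """Chat-platform side: classify the text, then look up the matching script."""
    topic = classify(utterance)
    return KNOWLEDGEBASE[topic] if topic else "handoff_to_human"


print(route("I just received a bag of chips that were smashed to pieces"))  # damaged_item_script
```

A production NLP layer would return a confidence score rather than a raw word count. The fallback to a human when nothing matches is one reasonable policy, not the only one.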

For example, if the user were to say something like:

“I just received a bag of chips that were smashed to pieces”, the chat platform would send this text to the natural language processing layer (NLP) for interpretation. Once it had successfully classified it as the “damaged item” disposition, this would be communicated back to the chat platform as such, which would then trigger a call to the knowledgebase to find the associated script. Once the script had been retrieved, this would then trigger the chat platform to begin execution, which would include a series of functions (leading to various internal API calls) pieced together in the following manner:

Get user to log in

Determine orderId of item(s)

Get items associated with orderIds

Get customer to select damaged item(s)

Get customer to select quantities

Determine actions allowed for specific item(s) - refund without return, refund with return, replacement

Get customer to select desired action

Execute action
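The script above can be sketched as an ordered list of step functions that share a context. This minimal Python sketch makes several assumptions: hypothetical in-memory stand-ins (`ORDERS_BY_USER`, `ITEMS_BY_ORDER`) for the internal APIs, pre-collected `answers` in place of live conversational turns, and an invented business rule that non-returnable items can't be refunded with a return.

```python
# Hypothetical stand-ins for the internal APIs the chat platform would call.
ORDERS_BY_USER = {"u42": ["o1001"]}
ITEMS_BY_ORDER = {
    "o1001": [
        {"sku": "chips-bbq", "qty": 3, "price_cents": 399, "returnable": False},
        {"sku": "mug", "qty": 1, "price_cents": 1200, "returnable": True},
    ]
}


def get_user_to_log_in(ctx):
    ctx["user_id"] = ctx["answers"]["user_id"]


def determine_order_ids(ctx):
    ctx["order_ids"] = ORDERS_BY_USER.get(ctx["user_id"], [])


def get_items_for_orders(ctx):
    ctx["items"] = {item["sku"]: item for oid in ctx["order_ids"] for item in ITEMS_BY_ORDER[oid]}


def get_damaged_items(ctx):
    ctx["selected"] = [sku for sku in ctx["answers"]["damaged_skus"] if sku in ctx["items"]]


def get_quantities(ctx):
    # Never accept more units than were actually ordered.
    ctx["quantities"] = {
        sku: min(ctx["answers"]["quantities"][sku], ctx["items"][sku]["qty"])
        for sku in ctx["selected"]
    }


def determine_allowed_actions(ctx):
    # Assumed business rule: non-returnable items (e.g. food) skip "refund with return".
    ctx["allowed"] = {}
    for sku in ctx["selected"]:
        actions = ["refund_without_return", "replacement"]
        if ctx["items"][sku]["returnable"]:
            actions.append("refund_with_return")
        ctx["allowed"][sku] = actions


def get_desired_action(ctx):
    ctx["actions"] = {}
    for sku in ctx["selected"]:
        choice = ctx["answers"]["actions"][sku]
        if choice not in ctx["allowed"][sku]:
            raise ValueError(f"{choice} is not allowed for {sku}")
        ctx["actions"][sku] = choice


def execute_action(ctx):
    # Refunds report an amount in cents; replacements report a unit count.
    ctx["results"] = []
    for sku, action in ctx["actions"].items():
        qty = ctx["quantities"][sku]
        if action.startswith("refund"):
            ctx["results"].append((sku, action, qty * ctx["items"][sku]["price_cents"]))
        else:
            ctx["results"].append((sku, action, qty))


DAMAGED_ITEM_SCRIPT = [
    get_user_to_log_in, determine_order_ids, get_items_for_orders, get_damaged_items,
    get_quantities, determine_allowed_actions, get_desired_action, execute_action,
]


def run_script(script, answers):
    """Run each step in order against a shared context, as the chat platform would."""
    ctx = {"answers": answers}
    for step in script:
        step(ctx)
    return ctx["results"]
```

Keeping the script as data (a list of steps) rather than hard-coded control flow mirrors the idea of storing scripts in a knowledgebase and having the chat platform execute them.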

While chatbots have come a long way since we built ours, the logic behind agentive software still holds true - allowing users to use conversation to get something done without the interference of a human.

One last thing to note about operational product management. While the “how” is what drives outcomes and innovation, if there is an interface associated with the system, the PM will also work with the UX/design team to determine how it all comes together for the user. And while this requires some of the intuition and empathy of consumer-facing product management, the xPA collaboration and in-depth UXR traditionally associated with consumer products are kept to a minimum here, as the main character is the functionality and not how the experience is presented. See figure 4.

So why am I writing about building operational software? Because a) it’s mentally challenging b) it’s under-appreciated c) I miss it and d) it’s been 10 years since we launched Jet and I’m feeling nostalgic.

Figure 1: Partial refunds flow

Figure 2: Chat system

Figure 3: Executable script

Figure 4: How it comes together for the user

Finding the best dishes using R

When I look back on where I was in 2016 - almost 10 years ago - what comes to mind is share houses in the Hamptons, a soon-to-be-announced Jet.com acquisition, a 1-bedroom apartment across from the Metropolitan Opera House, and random side projects to keep my mind “moving”. Anyone who knows me knows that I consider the brain a muscle, and every few years, I take on a seemingly random side project to keep it “in shape”. An unchallenging work environment is my biggest ick.

These projects come in all shapes and sizes. Sometimes they’re creative, like learning how to DJ (thank you, Scratch Academy), sometimes they’re artistic, like the fashion design course I took at Parsons in 2021, and sometimes they’re straight up niche and personal. In 2016, the most important thing in my life was going to good restaurants and making sure I ordered the right thing, so when inspiration hit, I decided to go do something about it.

A bit of context before continuing this story. For my MBA internship, I had accidentally been hired into a data science role without any prior knowledge of R, SQL, or Python. The founder had hired me without really thinking through the actual needs of the team, so a few months after accepting the role, I showed up and was assigned to one of the other co-founders, a guy named Daniel Brady, who needed someone to do hardcore analytics. So, on day one, he told me I had 2 weeks to learn R, or else I’d effectively be useless (he was actually a very nice guy, but this was basically the gist of our conversation). So, what did I do? I took those 2 weeks and I learned R. And not only did I use it a lot that summer, I also continued to use it at Jet, and in 2016, I found another way to flex this skill.

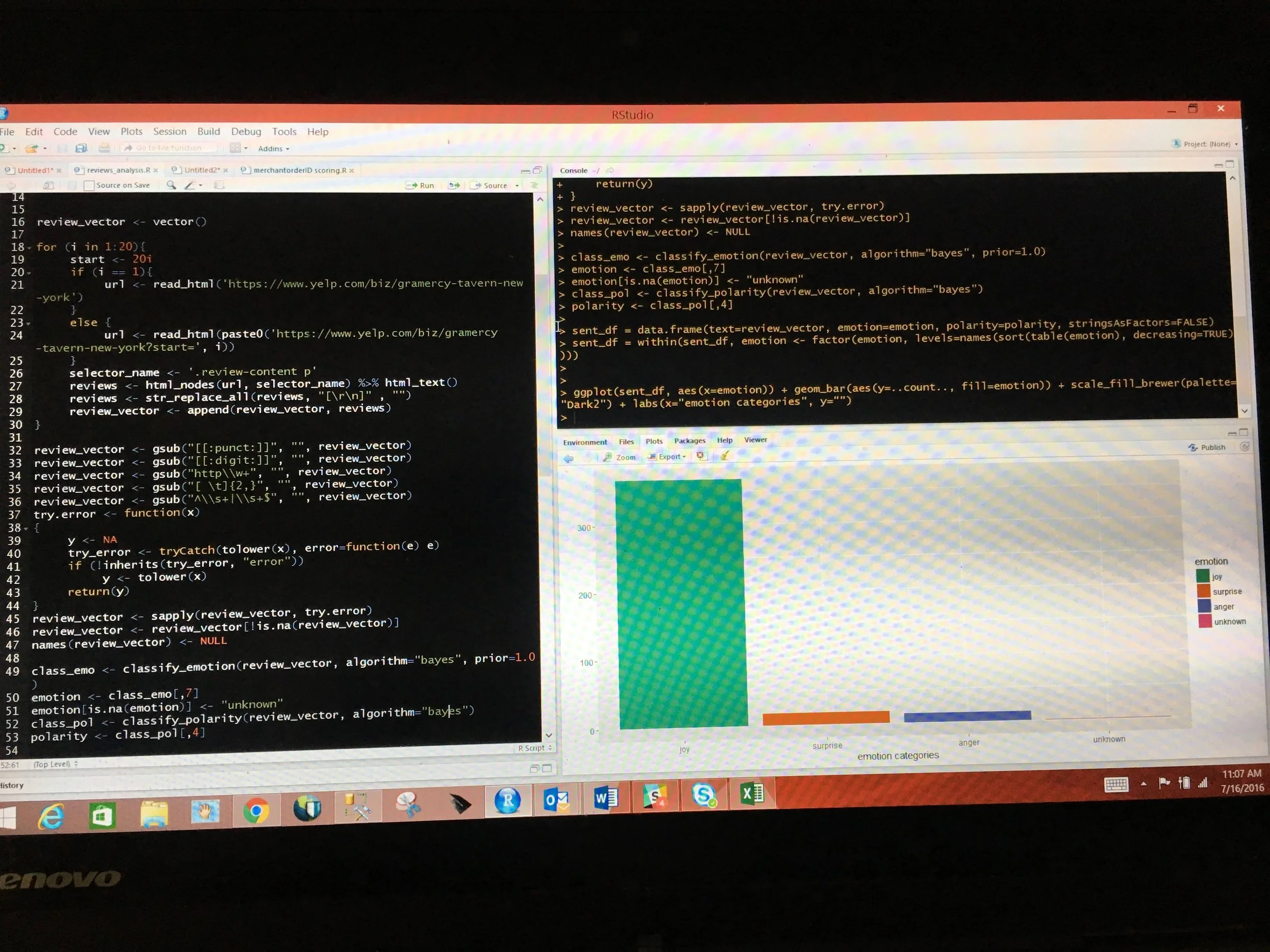

So back to 2016 and wanting to eat the best stuff at the best places. Since R is mostly used for statistical computing, it’s really not a great language for building consumer applications. In fact, it’s really not used for this at all. But since it’s the only language I know, I wanted to make it work and landed on a series of R scripts that I ran ad-hoc when hunger hit.

Let me paint a picture - you’re doing your makeup and blow-drying your hair for a night out while simultaneously pulling up RStudio to run some scripts to figure out where to get the best salmon dish in the West Village. This was the “customer journey” of this product, and undoubtedly, it would’ve performed very poorly in UXR.

But, I really wanted this thing to exist, and for most of that year, I used it as my personal food expert.

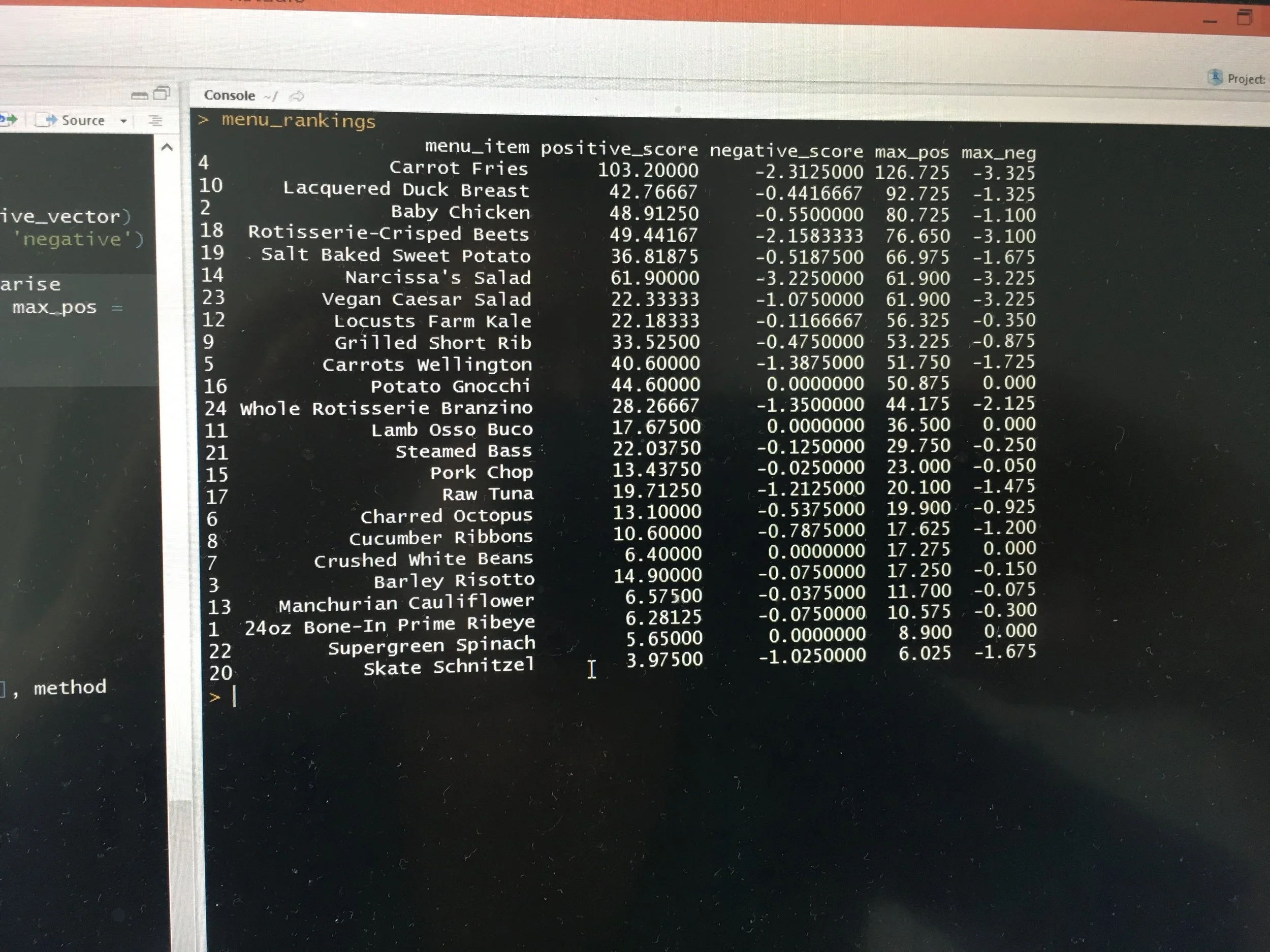

So how did it work? Basically, I crawled the Internet for chatter about each restaurant in the city, analyzed the sentiment of each sentence that mentioned a dish (which I cross-referenced against their menus), and then ranked the dishes based on the average positivity of the sentences that contained them. I also did this for other aspects - whether it was good for a date or for groups, how the service was, the ambiance, etc. - and then extracted the top positive characteristics and made them searchable. I also normalized the scores so I could compare dishes across restaurants (like the best-ranked salmon dish in 10024), and this is how I discovered the Turkish place on the UWS that makes surprisingly good pizza.
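The original was a series of R scripts. Here is a rough Python sketch of the same idea, built on several loud assumptions: a tiny made-up sentiment lexicon instead of a real sentiment model, naive sentence splitting on punctuation, and exact substring matching of menu dish names. For normalization it uses one possible approach, subtracting each restaurant’s average sentence sentiment from its dish scores. The restaurants, reviews, and menus below are invented.

```python
import re
from statistics import mean

# Tiny hypothetical sentiment lexicon; a real version would use a proper sentiment model.
POSITIVE = {"amazing", "great", "delicious", "perfect", "best", "good", "love"}
NEGATIVE = {"bland", "dry", "bad", "overcooked", "soggy", "worst", "meh"}


def sentence_sentiment(sentence):
    """Positive word count minus negative word count."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def split_sentences(text):
    return [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]


def rank_dishes(reviews, menus):
    """reviews: restaurant -> list of texts; menus: restaurant -> list of dish names.

    Returns (restaurant, dish, raw_score, normalized_score) rows, best first.
    """
    rows = []
    for restaurant, texts in reviews.items():
        sentences = [s for t in texts for s in split_sentences(t)]
        if not sentences:
            continue
        baseline = mean(sentence_sentiment(s) for s in sentences)  # restaurant-wide average
        for dish in menus.get(restaurant, []):
            hits = [sentence_sentiment(s) for s in sentences if dish.lower() in s.lower()]
            if hits:
                raw = mean(hits)
                rows.append((restaurant, dish, raw, raw - baseline))
    return sorted(rows, key=lambda r: r[3], reverse=True)


reviews = {
    "Cafe A": ["The salmon was amazing. Service was bad. The fries were soggy."],
    "Bistro B": ["Great wine, great vibe. The salmon was good but a bit dry."],
}
menus = {"Cafe A": ["Salmon", "Fries"], "Bistro B": ["Salmon"]}

ranked = rank_dishes(reviews, menus)
best_salmon = [r for r in ranked if "salmon" in r[1].lower()]
print(best_salmon[0][:2])  # ('Cafe A', 'Salmon')
```

Centering on the restaurant’s own baseline is meant to keep one place with gushing reviewers from dominating every dish category. Whether the original scripts normalized this way is not stated.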

I’m pretty sure with some good prompting, you can get ChatGPT (oops, I mean Gemini) to do this for you now, although I do wonder how optimized it is for this specific use case. Either way though, I was very happy to make this a part of my getting ready routine back in 2016, as that was a year of zero regrettable meals.

What is an AI PM?

What is an AI PM? A lazy PM :)

This might be controversial (or maybe not, I don’t really talk about these things with my friends, so I have zero sense of how accepted or fringe my ideas are), but I truly believe that defining products that incorporate LLMs is much easier than building products that don’t. This is mainly for consumer-facing software and not for internal or operational systems FYI.

There’s so much chatter these days about “becoming an AI PM” and how this is a much-needed transition for all of us, but when the majority of data that shows on the UI comes from prompting or fine-tuning a model rather than from a series of complex and lengthy actions that the BE has to take, it feels like, at least on the product management side, we’ve gotten off easy.

Instead of defining how everything functions - the how - requirements are now about what feature is available to the user (assuming the model can just “do the thing”), how to show it, and what that interaction looks like.

An example from my own work. A few years back, I was building a product that could identify “up-and-coming cool brands” by finding patterns across established brands and then using the factors that contributed to something becoming mainstream “cool” to identify others that had the potential to take off. I called this Scout, because it functioned just like a baseball scout.

When writing the requirements for something like this, I had to define what an established fashion brand was, what an “uncool” fashion brand was, how to identify them, how to find patterns, how to weigh the factors, how to calculate the “predictable coolness score”, how to train the model based on some objective source of truth, and how to find the right brands in Google’s corpus.
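For a sense of what those requirements translate into, here is a heavily simplified sketch of one way to “weigh the factors” and produce a score: logistic regression trained with plain gradient descent. It is an illustration only and not how Scout actually worked. The three features, the training brands, and their labels are all made up.

```python
import math

# Hypothetical features per brand, each scaled to roughly 0..1:
# (social follower growth, press mention growth, stockist growth).
# Labels: 1 = later became mainstream "cool", 0 = did not. All values are invented.
TRAINING = [
    ((0.9, 0.8, 0.7), 1),
    ((0.8, 0.6, 0.9), 1),
    ((0.7, 0.9, 0.6), 1),
    ((0.2, 0.1, 0.3), 0),
    ((0.3, 0.2, 0.1), 0),
    ((0.1, 0.3, 0.2), 0),
]


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def train_logistic(data, lr=0.5, epochs=2000):
    """Batch gradient descent on log-loss; returns (weights, bias)."""
    n_features = len(data[0][0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b


def coolness_score(x, w, b):
    """Probability-like score that a brand with features x takes off."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


weights, bias = train_logistic(TRAINING)
candidates = {"Brand X": (0.85, 0.7, 0.8), "Brand Y": (0.2, 0.25, 0.15)}
for name, features in candidates.items():
    print(name, round(coolness_score(features, weights, bias), 3))
```

The learned weights play the role of “how to weigh the factors”. The hard, unglamorous part the requirements describe is choosing the features and the source of truth for the labels, which this sketch simply assumes.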

If I were to build this today, I could probably just use Gemini.

Now, I’m not saying there isn’t work to be done in this new world. There’s a lot around defining the prompts and tons of quality work to ensure the responses are accurate and good (which is not a given), but it does raise the question of what a great PM looks like in the future.

Maybe, product becomes less about getting into the weeds of requirement-writing, defining how data is structured and retrieved, and detailing how things get done, and more about coming up with truly creative use-cases, defining fun and magical user experiences, and having vision.

Maybe we are being forced to become more creative, empathetic, and “right-brained”, as AI begins to take on a role that we were once required to fill.

One last nod to my work building Scout - Doen and Farm Rio were 2 of the brands that my model had identified as “soon to take off”, and it’s pretty cool to see them continuing to grow since it spotted them in early 2022 :)